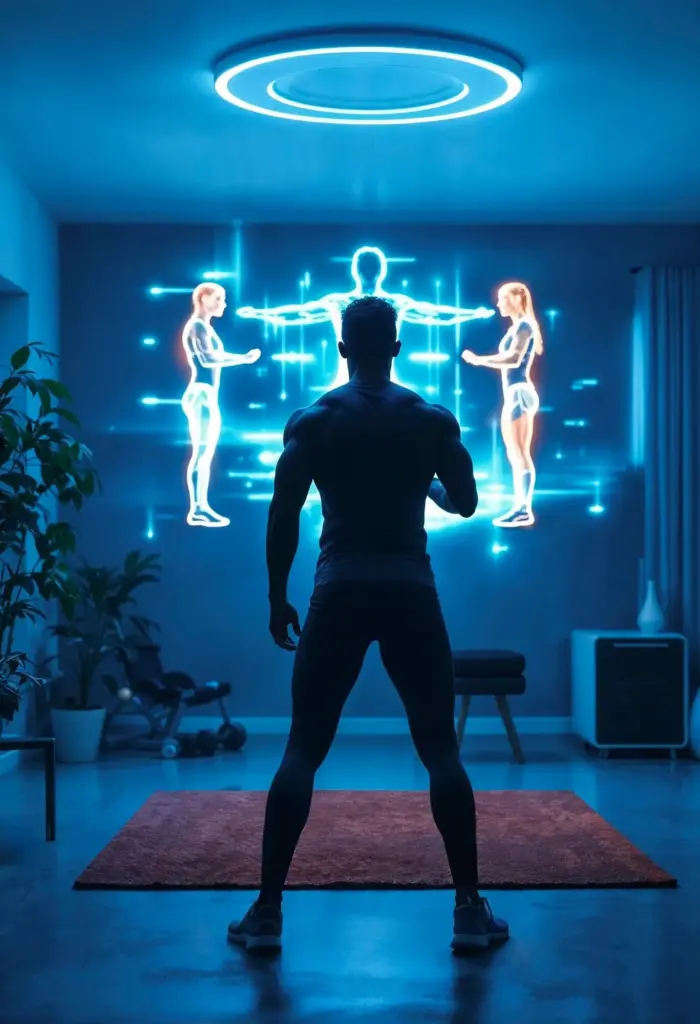

Moving Together: Human–Robot Co‑Motion in Minds and Mechanisms

Sensing Subsecond Cues and Turning Them Into Helpful Motion

Fluency depends on catching early signals: gaze shifts, hand acceleration profiles, muscle activation hints, and tiny force fluctuations that precede a decision. By fusing vision, wearable sensors, and interaction forces, a collaborator can shape trajectories that quietly prepare the workspace, stage tools, or lighten loads. That readiness reduces negotiation overhead, prevents awkward stalls, and lets humans stay immersed in meaningful actions instead of managing the robot’s next move.
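One way to picture that fusion step is a small scoring function that combines several early cues into a single estimate of how likely the person is to act on a target soon. The sketch below is illustrative only: the `Cues` fields, the weights, the bias, and the 0.7 threshold are hypothetical values chosen for the example, not measurements from a deployed system.

```python
import math
from dataclasses import dataclass


@dataclass
class Cues:
    """Hypothetical early signals, each already normalized by an upstream sensor stage."""
    gaze_on_target: float    # 0..1, share of recent gaze samples landing on the target
    hand_accel: float        # m/s^2, hand acceleration component toward the target
    force_delta: float       # N, recent change in measured interaction force


def intent_score(cues, weights=(2.0, 0.8, 0.5), bias=-2.5):
    """Weighted sum of cues squashed to 0..1 with a logistic function.

    The weights and bias are placeholders; in practice they would be
    fitted to recorded interaction data.
    """
    z = (bias
         + weights[0] * cues.gaze_on_target
         + weights[1] * cues.hand_accel
         + weights[2] * cues.force_delta)
    return 1.0 / (1.0 + math.exp(-z))


def should_prestage(cues, threshold=0.7):
    """Decide whether to start a preparatory motion such as staging a tool."""
    return intent_score(cues) >= threshold


idle = Cues(gaze_on_target=0.1, hand_accel=0.0, force_delta=0.0)
reaching = Cues(gaze_on_target=0.9, hand_accel=1.5, force_delta=1.0)
```

With these placeholder values, `should_prestage(reaching)` fires while `should_prestage(idle)` does not. A logistic score is only one option; its appeal here is that each cue's contribution stays easy to inspect and explain.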

From Fatigue and Friction to Confidence and Flow

Poor coordination adds cognitive load, which shows up as hesitation and unnecessary corrections. Good co‑motion shortens dwell times, reduces grasp retries, and avoids collisions by predicting likely goals and presenting affordances at the right moment. Subtly matching velocities and contact stiffness makes handovers feel natural. Workers report lower perceived effort and a stronger sense of control when assistance arrives slightly before it is needed and is paired with clear signals about what will happen next.
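Predicting likely goals can be sketched as a running belief over candidate targets, updated as the hand moves. The example below is a minimal illustration, assuming a 2D workspace and a simple likelihood that favors goals lying in the hand's current direction of travel. The function name, the goal names, and the sharpness parameter `kappa` are assumptions made for this sketch.

```python
import math


def update_goal_belief(belief, hand_pos, hand_vel, goals, kappa=4.0):
    """Bayesian update of goal probabilities from one hand-motion sample.

    belief:   dict mapping goal name to prior probability
    hand_pos: (x, y) hand position
    hand_vel: (vx, vy) hand velocity
    goals:    dict mapping goal name to (x, y) goal position
    kappa:    hypothetical sharpness; larger values trust direction more
    """
    speed = math.hypot(hand_vel[0], hand_vel[1])
    if speed < 1e-6:
        # A stationary hand carries no directional evidence; keep the prior.
        return dict(belief)

    posterior = {}
    for name, (gx, gy) in goals.items():
        dx, dy = gx - hand_pos[0], gy - hand_pos[1]
        dist = math.hypot(dx, dy)
        if dist < 1e-6:
            cos_angle = 1.0  # hand is already at this goal
        else:
            cos_angle = (dx * hand_vel[0] + dy * hand_vel[1]) / (dist * speed)
        # Likelihood grows as the velocity points more directly at the goal.
        posterior[name] = belief[name] * math.exp(kappa * cos_angle)

    total = sum(posterior.values())
    return {name: p / total for name, p in posterior.items()}


goals = {"tray": (1.0, 0.0), "bin": (0.0, 1.0)}
prior = {"tray": 0.5, "bin": 0.5}
posterior = update_goal_belief(prior, hand_pos=(0.0, 0.0), hand_vel=(0.3, 0.0), goals=goals)
```

Here the hand moves straight toward the hypothetical "tray", so its probability rises well above that of the "bin". A robot could begin presenting an affordance once one goal's probability crosses a chosen threshold, which is how assistance can arrive slightly before it is needed.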

A Shop‑Floor Anecdote That Changed Our Playbook

In a small assembly pilot, operators complained that an otherwise capable cobot always felt late. We adjusted its kinematics so that handovers stayed inside the operator’s preferred reach zone and added a half‑second anticipatory lift triggered by gaze direction. Suddenly, handovers clicked. Throughput rose without pushing speed limits, and, more importantly, conversations shifted from frustration to ideas for new tasks the system could quietly simplify next.

Kinematic Design That Respects Bodies, Workspaces, and Uncertainty

Cognitive Models That Predict, Explain, and Negotiate Intent

Communication Channels: Gaze, Gesture, Voice, and Haptics Working Together

Case Studies and Measurable Outcomes From Real Work

Getting Started: Prototyping, Evaluation, and Responsible Rollout

Prototype Quickly, Learn Faster, Share Widely

Evaluate With Metrics People Actually Feel

From Pilot to Scale Without Losing Humanity
